Explaining the inner workings of Artificial Intelligence to people unfamiliar with the concepts can be challenging. These people are often intelligent and discerning, so any vague or unclear explanation will be met with scrutiny. Whether you are seeking funding, proposing a special project, or warning of potential danger, you need a way to communicate effectively without getting lost in the complexity and jargon of Large Language Models (LLMs).

How about using this analogy, which I call "the jigsaw"?

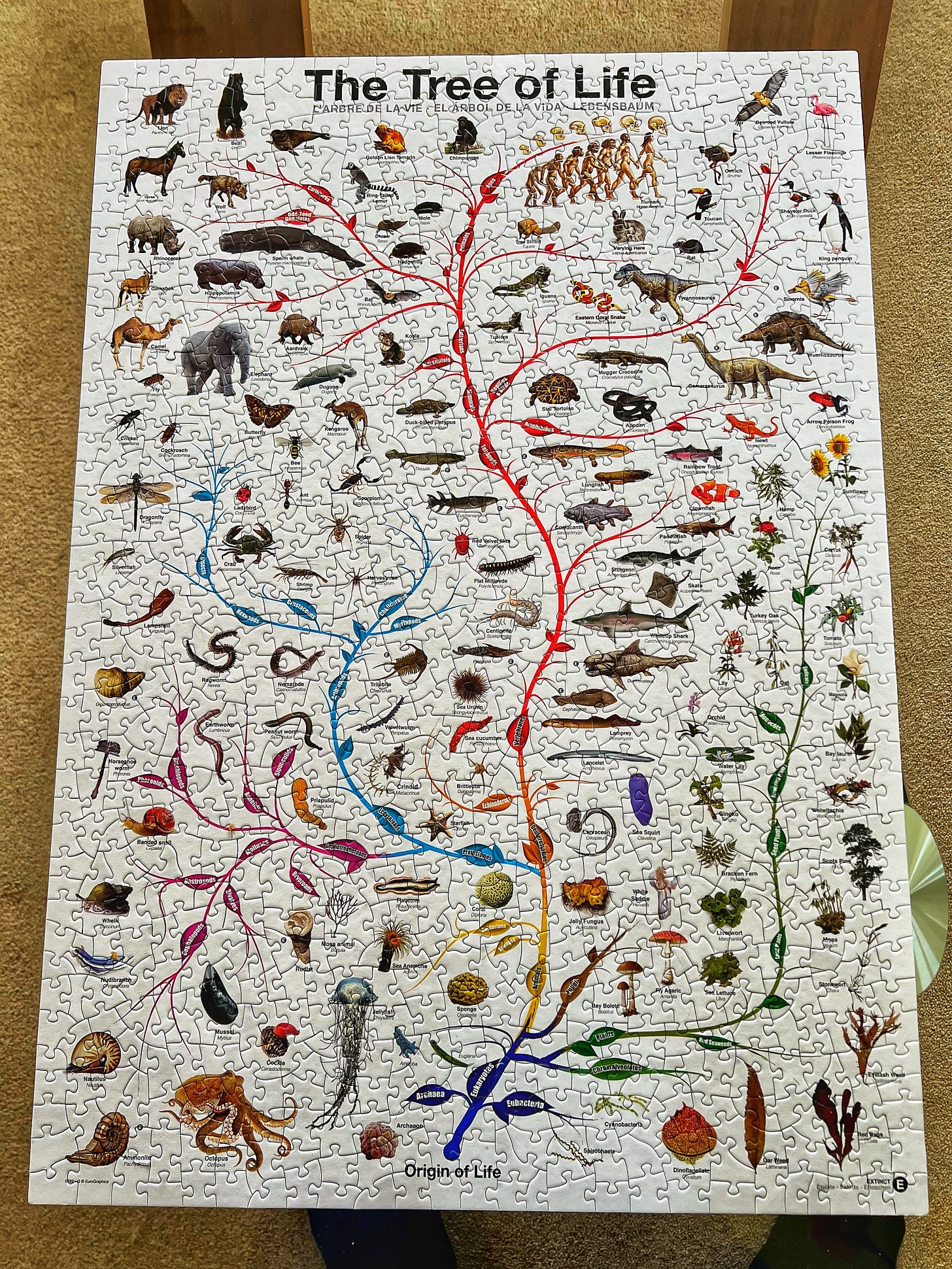

Imagine that each word is a jigsaw piece and that the picture on the front is the overall meaning of the writing. The meaning of each word is encoded in the part of the picture printed on its piece. Take, for example, the jigsaw in front of me now, called "the Tree of Life". Its image represents the evolution of species, their interconnectedness, and the lineage of life on Earth.

For a human, putting this jigsaw together means understanding how that lineage works and what the image on each piece means. Dinosaurs will go together; parts of dinosaurs spread across several pieces make up the concept of a dinosaur. Roots will go at the bottom, humans at the top, and plants will not sit on the same branches as fish but will connect somewhere in their shared ancestry. Completing this jigsaw is, at least partly, an exercise in knowledge: knowledge of the meaning encoded in the image. Even when a person uses the shapes alone to find a space, the satisfaction that this space is indeed the correct one comes solely from what they know, either about the subject ("Oh! Yes, the snake is made up with this piece") or about the completed image traditionally shown on the box ("Ah! Here, see, the snake is up on this side; here we go...").

This is different from how LLMs do it.

Subscribe to Just A Simulation to keep reading this post and get 7 days of free access to the full post archives.